Course Description

Machine learning algorithms are data analysis methods that search data sets for patterns and characteristic structures. Typical tasks are classification, regression and unsupervised model fitting. Machine learning has emerged mainly from computer science and artificial intelligence, and draws on methods from a variety of related subjects, including statistics, applied mathematics and more specialized fields such as pattern recognition and neural computation. Applications include image and speech analysis, medical imaging, bioinformatics and exploratory data analysis in the natural sciences and engineering:

|

|

|

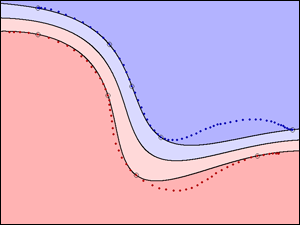

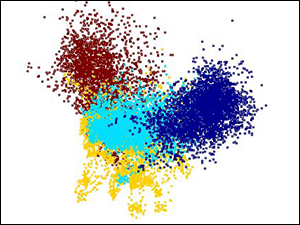

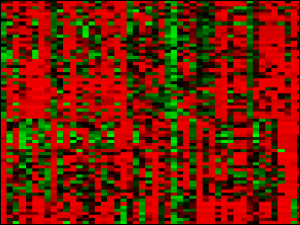

| Non-linear decision boundary of a trained support vector machine (SVM) using a radial-basis function kernel. | Fisher's linear discriminant analysis (LDA) of four different auditory scenes: speech, speech in noise, noise and music. | Gene expression levels obtained from a micro-array experiment, used in gene function prediction. |

This course is intended as an introduction to machine learning. It will review the necessary statistical preliminaries and provide an overview of commonly used machine learning methods. More advanced topics will be discussed in the course Statistical Learning Theory, held in the spring semester by Prof. Buhmann.

Other related courses offered by D-INFK include: Computational Intelligence Lab, Probabilistic Graphical Models for Image Analysis, Probabilistic Artificial Intelligence, Advanced Topics in Machine Learning, Information Retrieval, Big Data, Data Mining.

News

| Date | What? |
|---|---|
| 17.01. | As explained in the Q&A, some typos and mistakes were corrected. |
| 24.11. | |
| 04.11. | |
| 02.10. | |
| 24.09. | |
| 17.09. | |
| 22.08. | |

Syllabus

Some of the material can only be accessed with a valid nethz account.

General Information

VVZ Information: See here.

Time and Place

Lectures

| Time | Room |
|---|---|
| Mon 14-15 | ETF E1 |
| Tue 10-12 | NO C60 |

Tutorials*

| Time | Room | Last Name |
|---|---|---|
| Wed 13-15 | CAB G11 | A-G |
| Wed 15-17 | CAB G61 | H-N |
| Thu 15-17 | CAB G59 | O-R |
| Fri 08-10 | CHN E46 | S |
| Fri 13-15 | ML E12 | T-Z |

* All tutorial sessions are identical. Please attend the session assigned to you based on the first letter of your last name.

Exercises

The exercise problems will consist of theoretical pen-and-paper assignments. Please note that submitting solutions is not mandatory; a Testat is not required in order to take the exam. We will publish the exercise solutions after one week.

If you choose to submit:

- Send a soft copy of your solution to the teaching assistant responsible for that exercise (named at the top of the exercise sheet). This can be LaTeX, but also a simple scan or even a photo of a handwritten solution.
- Please do not submit hard copies of your solutions.

Project

Part of the coursework will be a project, carried out in groups of 3 students. The goal of this project is to get hands-on experience in machine learning tasks. The project grade will constitute 30% of the total grade. More details on the project will be given in the tutorials.

Exam

The examination is written and lasts 120 minutes. The language of examination is English. As a written aid, you may bring two A4 pages (i.e. one A4 sheet of paper), either handwritten or typed in a font size of at least 11 points. The written exam will constitute 70% of the total grade.

Resources

Text Books

C. Bishop. Pattern Recognition and Machine Learning. Springer, 2007.

This is an excellent introduction to machine learning that covers most topics treated in the lecture. It contains many exercises, some with worked solutions. Available from the ETH-HDB and ETH-INFK libraries.

R. Duda, P. Hart, and D. Stork. Pattern Classification. John Wiley & Sons, second edition, 2001.

The classic introduction to the field. An early edition is available online for students attending this class; the second edition is available from the ETH-BIB and ETH-INFK libraries.

T. Hastie, R. Tibshirani, and J. Friedman. The Elements of Statistical Learning: Data Mining, Inference and Prediction. Springer, 2001.

Another comprehensive text, written by three Stanford statisticians. It covers additive models and boosting in great detail. Available from the ETH-BIB and ETH-INFK libraries. A free PDF version (second edition) is available online.

L. Wasserman. All of Statistics: A Concise Course in Statistical Inference. Springer, 2004.

This book is a compact treatment of statistics that facilitates a deeper understanding of machine learning methods. Available from the ETH-BIB and ETH-INFK libraries.

Matlab

The official Matlab documentation is available online at the MathWorks website (also in printable form). If you have trouble accessing Matlab's built-in help function, you can use the online function reference on that page or the command-line help (type help <function> at the prompt). There are several primers and tutorials on the web; a later edition of one such primer became the book Matlab Primer by T. Davis and K. Sigmon (CRC Press, 2005).

Discussion Forum

We maintain a discussion board at the VIS inforum. Use it to ask questions of general interest and interact with other students of this class. We regularly visit the board to provide answers.

Previous Exams

Contact

Instructor: Prof. Joachim M. Buhmann

Head Assistant: Gabriel Krummenacher

Assistants: Alexey Gronskiy, Dmitry Laptev, Dr. Dwarikanath Mahapatra, Nico Gorbach, Kate Lomakina, Pratanu Roy, Yatao Bian, Mario Lučić, Darko Makreshanski, Veselin Raychev, Chen Chen